The Power of Micro-LLMs and Small LLMs in Enterprise AI Solutions

In the evolving AI landscape, enterprises are embracing Micro-LLMs and Small LLMs to boost productivity, cut costs, and extract more insight from their data. These models provide domain-specific precision, enhancing accuracy in fields such as finance, healthcare, and law. By focusing on specialized tasks, Micro-LLMs and Small LLMs reduce inaccurate and irrelevant responses and so deliver more relevant outcomes.

These models also engage users well because their responses are personally relevant and contextually apt. For instance, when they answer frequently asked questions and provide end-to-end support, customer satisfaction and retention improve.

Base models, including Llama 2 and GPT-3, serve as a starting point for building more specialized models. They can be adapted with domain-specific labeled data to produce better, more contextually aware models.

What are Micro-LLMs and Small LLMs?

Micro-LLMs and Small LLMs are compact offshoots of these large model architectures. The largest models have hundreds of billions of parameters or more (GPT-3 has 175 billion), whereas Micro-LLMs generally operate with 100 million or fewer parameters and Small LLMs have fewer than 5 billion.

By design, Micro-LLMs and Small LLMs need far less computational power and specialized hardware. Within their target domain they can handle a range of tasks with little loss of performance or accuracy, and in targeted applications they can rival much larger models. This makes them practical where hardware resources are limited, such as mobile devices, IoT, and edge computing environments, where low latency and efficient use of resources are crucial.
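To make the hardware point concrete, a rough estimate of the memory needed just to hold a model's weights is the parameter count multiplied by the bytes used per parameter. The sketch below applies that arithmetic to the size thresholds mentioned above and to GPT-3 for comparison. The 16-bit and 4-bit precisions are illustrative assumptions, and the figures ignore activations, caches, and runtime overhead.

```python
# Rough weight-memory estimate: parameters x bytes per parameter.
# Precisions below are illustrative assumptions; real deployments also need
# memory for activations, caches, and runtime overhead.

BYTES_PER_PARAM = {"fp16": 2.0, "int4": 0.5}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Return approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


MODELS = {
    "Micro-LLM (100M params)": 100e6,
    "Small LLM (5B params)": 5e9,
    "GPT-3 (175B params)": 175e9,
}

if __name__ == "__main__":
    for name, params in MODELS.items():
        fp16 = weight_memory_gb(params, "fp16")
        int4 = weight_memory_gb(params, "int4")
        print(f"{name}: ~{fp16:g} GB at fp16, ~{int4:g} GB at 4-bit")
```

Under these assumptions a 100-million-parameter model fits in a fraction of a gigabyte, which is why phones and edge devices become realistic targets.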

Domain-Specific Precision

The primary advantage of Micro-LLMs and Small LLMs is domain-specific accuracy. By fine-tuning such models on industry-specific data, enterprises can achieve more accurate and relevant interactions. In IT support, for example, customer interactions, product manuals, and FAQs can be used to train a Micro-LLM.

This enables the model to diagnose common issues and guide users step by step through a fix. Trained on such a dataset, the Micro-LLM can respond promptly and relevantly, providing value to end users and saving time because no human intervention is needed. The model therefore streamlines IT support and increases customer satisfaction. It also frees staff from routine, basic queries so they can spend their time on complex, high-value tasks, which is especially valuable in IT operations.

Enhanced Security and Privacy

Micro-LLMs and Small LLMs support better security and privacy because their small size makes them easier to manage. Deploying them on-premises or in a private cloud minimizes the risk of data leakage. Industries such as finance and healthcare count this among the most important benefits, given their need for data confidentiality. Running models locally reduces an enterprise's need to send data to third-party models, keeping critical information secure and in line with strict regulatory requirements.

Operational Efficiency

Operational efficiency is another major advantage of Micro-LLMs and Small LLMs. Their streamlined architecture enables rapid development cycles, so data scientists can iterate quickly and adapt to new data trends or organisational requirements.

Additionally, their simpler decision pathways and smaller parameter space make the models easier to interpret and debug. Companies can also integrate them into existing toolchains to improve the efficiency of enterprise workflows.

Practical Applications in Enterprises

Employee Self-Service

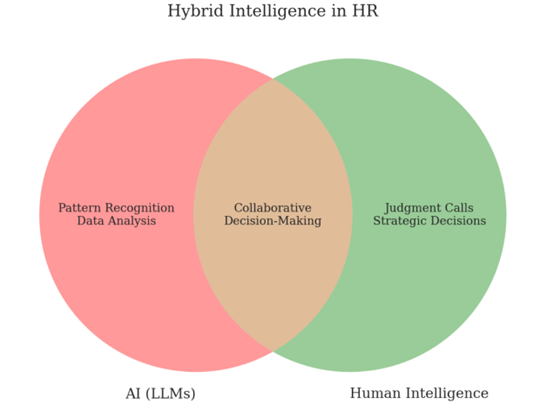

Micro-LLMs can also help employees with HR-related questions, such as how many days of paid time off apply to parental leave. A Micro-LLM can ingest the HR policy documents and provide accurate, personalized answers, which reduces failed queries and improves employee satisfaction. An HR-focused Micro-LLM helps employees understand policies, benefits, and compliance requirements, putting less pressure on the HR department and improving the overall work experience.

Customer Service Automation

In customer service, Micro-LLMs use AI assistant capabilities to hold natural, interactive conversations, address general enquiries, and provide end-to-end service. For example, an LLM-powered chatbot can remove unqualified leads from prospect lists so that sales effort is more focused.

A customer service-focused Micro-LLM can resolve basic issues such as account resets or common product malfunctions, leading to higher first-call resolution and fewer interactions that need a human.

Enhanced User Engagement

Micro-LLMs and Small LLMs can engage users more effectively when they are connected to new datasets and customer engagement platforms. In customer service, they handle routine complaints, offer end-to-end support, and give customized responses, which boosts customer satisfaction and retention rates.

They also automate menial tasks, leaving human agents more time for intricate problems. This improves efficiency, reduces waiting times, and makes the customer experience smoother.

Personalization in Customer Support

In customer service, Micro-LLMs handle routine issues automatically so that critical matters can be referred to human agents. The result is quicker, more relevant, and more personalized answers, which increases customer satisfaction and retention. Micro-LLMs also give customers immediate answers to common questions, further improving their experience.
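The split between automated handling and human escalation can be sketched as a small routing function. This is a minimal illustration, not a production design: the keyword lists are hypothetical stand-ins for a real intent model, and the names `route_ticket` and `Routing` are invented for this example.

```python
from dataclasses import dataclass

# Hypothetical keyword lists standing in for a real intent model.
ROUTINE_KEYWORDS = ("password", "reset", "opening hours", "invoice copy")
CRITICAL_KEYWORDS = ("fraud", "legal", "outage", "complaint")


@dataclass
class Routing:
    handler: str  # "micro_llm" or "human_agent"
    reason: str


def route_ticket(text: str) -> Routing:
    """Send routine requests to the model and everything else to a person."""
    lowered = text.lower()
    # Critical keywords are checked first so they always win over routine ones.
    if any(word in lowered for word in CRITICAL_KEYWORDS):
        return Routing("human_agent", "critical keyword found")
    if any(word in lowered for word in ROUTINE_KEYWORDS):
        return Routing("micro_llm", "routine keyword found")
    # When unsure, prefer a human over a wrong automated answer.
    return Routing("human_agent", "no confident match")
```

Defaulting to a human agent when nothing matches is a deliberate choice here: it trades some automation for a lower risk of mishandling a critical request.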

Personalization in Healthcare

Personalization also matters in healthcare, where small domain-specific LLMs can help clinicians make sense of complicated medical terminology. Such models can review patient histories and medical literature to support treatment planning and assist with diagnostics at the point of care.

Moreover, specialized training improves the precision of medical interactions, which means fewer errors and better patient outcomes. Streamlined clinical workflows let healthcare professionals spend more time on value-adding tasks, improving both patient care and patient satisfaction. Small LLMs can also be incorporated into healthcare systems to support more effective and timely decisions.

Cost-Effectiveness

Training massive, general-purpose models is expensive and can cost $4 million to $10 million or more. By comparison, Micro-LLMs and Small LLMs require less computing power and less specialized equipment, which puts them within reach of companies with limited budgets. Their lower computational demands make it possible to deploy them on modest hardware and to consume fewer cloud resources, which lowers cloud spending. This efficiency makes AI more accessible and affordable, because companies do not have to purchase costly hardware or build large, highly paid data science teams.

Deployment and Resource Efficiency

Micro-LLMs and Small LLMs can be deployed on-premises or in private clouds, cutting the expense of cloud services and specialized hardware. Their small size and low training requirements reduce costs for enterprises. AI solutions built on these models are therefore cost-effective, efficient, and adaptable to different resource constraints, which makes them well suited to budget-constrained businesses.

Efficiency in Processing

Micro-LLMs and Small LLMs consume fewer computational resources and therefore process requests faster. This efficiency suits them to real-time applications and resource-constrained environments. Smaller models have lower latency, which makes AI customer service and real-time data analysis practical. It also lowers overall operational costs, which appeals to businesses trying to save on AI expenses.

Financial and Accounting

In finance and accounting, Micro-LLMs make the audit process more efficient by answering auditors' questions and reducing the hours spent gathering information. This helps organizations reduce their external auditing costs. A finance-focused Micro-LLM can also help compute complex interest rates and lease liabilities, which supports SEC reporting and financial disclosure. It can analyze real-time market data and help detect fraud by interpreting financial transactions and flagging anomalies, which reduces financial risk and strengthens regulatory compliance.

Transcribing Customer Service Calls

Micro-LLMs can summarize customer service calls from their transcripts, removing the need for agents to take notes manually. This increases efficiency and improves the ROI of such tasks. In addition, enterprises that analyze their customer interactions can find trends and areas for improvement in their customer service. This data-driven approach leads to better customer satisfaction and loyalty.

Cost-Effectiveness and Resource Efficiency

Both Micro-LLMs and Small LLMs are smaller models, which leads to lower computational and financial costs. This means that smaller enterprises, or specific departments within large companies, can train, deploy, and maintain these models using fewer resources.

Adaptive and Responsive Capabilities

Micro-LLMs and Small LLMs provide the agility and responsiveness that real-time applications require. Because they are smaller, they process requests with lower latency and suit speed-critical applications. They can also be retrained and updated more easily and quickly, so the models remain effective over time.

Challenges and Considerations

While Micro-LLMs and Small LLMs have many strengths, there are also several challenges that enterprises need to consider.

Data Sourcing and Integration in Micro-LLMs and Small LLMs

One of the main challenges is sourcing data that is both accurate and diverse. Training data for Micro-LLMs and Small LLMs must be relevant and of high quality. Enterprises need to minimize redundancy in the data while still drawing on diverse sources.

For example, a Micro-LLM focused on the legal domain would require training on a large body of legal documents, case law, and regulations. Those responsible must take care to ensure the quality and relevance of the data, because the model's accuracy and effectiveness depend on it.
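One simple way to reduce redundancy before training is to drop documents that are identical after light normalization. The sketch below is a minimal example of that idea; the function names are hypothetical, and it only catches exact duplicates after lowercasing and whitespace cleanup. Near-duplicates, such as two versions of a contract that differ by one clause, would need a fuzzier technique.

```python
import hashlib
import re


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial variants compare equal."""
    return re.sub(r"\s+", " ", text).strip().lower()


def deduplicate(documents: list[str]) -> list[str]:
    """Keep the first occurrence of each document, judged by normalized text."""
    seen: set[str] = set()
    unique: list[str] = []
    for doc in documents:
        # Hash the normalized text so the seen-set stays small for long documents.
        digest = hashlib.sha256(normalize(doc).encode("utf-8")).hexdigest()
        if digest not in seen:
            seen.add(digest)
            unique.append(doc)
    return unique
```

For instance, `deduplicate(["A  clause.", "a clause.", "Another clause."])` keeps the first and third entries, since the second differs from the first only in case and spacing.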

Technical and Skill Constraints

Deploying Micro-LLMs and Small LLMs also brings substantial technical and skills challenges. Data encryption and data masking are minimum requirements for safeguarding sensitive information. A shortage of LLM expertise may stand in the way of effective deployment, so investment in training programs is necessary to fill these gaps. Suitable hardware and software capabilities are also required to deploy such models.
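As an illustration of data masking, the sketch below replaces email addresses and long digit runs with placeholders before text is used for training or sent to a model. The two regular expressions and the placeholder tokens are simplified assumptions for this example; a real deployment would need patterns matched to its own data and should not rely on regexes alone.

```python
import re

# Simplified email pattern; an assumption, not a full RFC-compliant matcher.
EMAIL_PATTERN = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
# Runs of 9 or more digits, e.g. account or card numbers without separators.
LONG_NUMBER_PATTERN = re.compile(r"\b\d{9,}\b")


def mask_sensitive(text: str) -> str:
    """Replace emails and long digit runs with fixed placeholder tokens."""
    text = EMAIL_PATTERN.sub("[EMAIL]", text)
    return LONG_NUMBER_PATTERN.sub("[NUMBER]", text)
```

Masking at ingestion time means the sensitive values never reach the training set or the model's context, which complements encryption of the stored originals.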

Arm Neoverse-based server processors provide the performance that Micro-LLMs and Small LLMs need on commodity hardware. In addition, the Neoverse V2 delivers double-digit performance improvements for cloud infrastructure workloads and triple-digit performance gains for ML and HPC workloads over prior generations.

Frameworks such as llama.cpp improve model performance, which helps bring these models within reach at relatively low cost. With its focus on optimizing CPU inference, llama.cpp enables LLMs to run faster and more efficiently on commodity hardware than general-purpose frameworks such as PyTorch.

Bias Mitigation

Micro-LLMs and Small LLMs are likely to inherit biases from their training data, which can produce wrong results. Enterprises therefore need to train these models on data that is as unbiased as possible and verify their predictions against real enterprise data.

Periodic auditing and testing of the model are needed to identify and counter biases. Continuous monitoring and feedback loops then refine the models steadily over time.
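One basic audit is to compare the model's accuracy across groups on a labeled evaluation set and watch the gap between the best and worst group. The sketch below assumes each record is a `(group, predicted_label, true_label)` tuple; the function names and the record layout are hypothetical, and accuracy gap is only one of several fairness measures an enterprise might track.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """records: list of (group, predicted_label, true_label) tuples."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, predicted, actual in records:
        total[group] += 1
        if predicted == actual:
            correct[group] += 1
    return {group: correct[group] / total[group] for group in total}


def accuracy_gap(records):
    """Largest difference in accuracy between any two groups."""
    scores = accuracy_by_group(records)
    return max(scores.values()) - min(scores.values())
```

Running this on each new model version, and alerting when the gap exceeds a threshold the team has agreed on, is one way to turn periodic auditing into a repeatable check.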

Future of Micro-LLMs and Small LLMs

As companies continue to navigate the complexities of generative AI, Micro-LLMs and Small LLMs are proving to be promising solutions for balancing capability with practicality. These models represent a significant development in AI and can help enterprises adopt its power in a controlled, efficient, and customized manner.

Ongoing improvements and advancements in Micro-LLM and Small LLM technology will shape the future landscape of enterprise AI solutions. Arm’s efficient CPU technology and specialized frameworks like llama.cpp are making it easier to deploy these models with enhanced accessibility and power.

Real-World Implementation of Micro-LLMs and Small LLMs

Financial Services

Micro-LLMs can be designed to capture the fine-grained terminology, regulatory context, and market dynamics of finance. They could change how financial institutions monitor risk, maintain regulatory compliance, and communicate with clients. For example, a finance-specific Micro-LLM could support real-time market analysis, fraud detection, and automated reporting.

Integrated with proprietary trading platforms, CRM systems, and customer databases, these models can provide personalized financial advice, customer service, and workflow automation. This level of customization makes financial operations more effective and efficient and generates real business value.

Healthcare

Applications of Micro-LLMs include helping doctors interpret complex medical terminology to arrive at accurate diagnoses. By analyzing patient histories and medical literature, these models can personalize their output to improve patient care and streamline clinical workflows.

For instance, a healthcare-focused Micro-LLM can assist with patient registration, medical coding, and insurance claims processing. With this data processed automatically, healthcare organizations are free to concentrate on delivering better care, with better results and higher patient satisfaction.

Conclusion

In summary, Micro-LLMs and Small LLMs are revolutionizing how enterprises employ AI. Micro-LLMs and Small LLMs increase domain-specific precision for accuracy in specific industries.

These models offer tailored efficiency, reducing inaccuracies and irrelevant responses. Micro-LLMs and Small LLMs enhance user engagement through personalized and relevant interactions. They improve customer satisfaction and retention rates.